Understanding how viewers focus on visual material has become a central part of research in design, media studies, product feedback, safety testing and digital behavior. Today, the process of tracking attention is no longer slow or limited to lab settings. Modern systems rely on advanced computation, sensors and pattern analysis to map attention with high accuracy. This shift has created new ways to study how people interact with screens, packaging, interfaces and real environments.

Below is a detailed look at how these systems work, why they are so fast and what powers their ability to study human focus in real time.

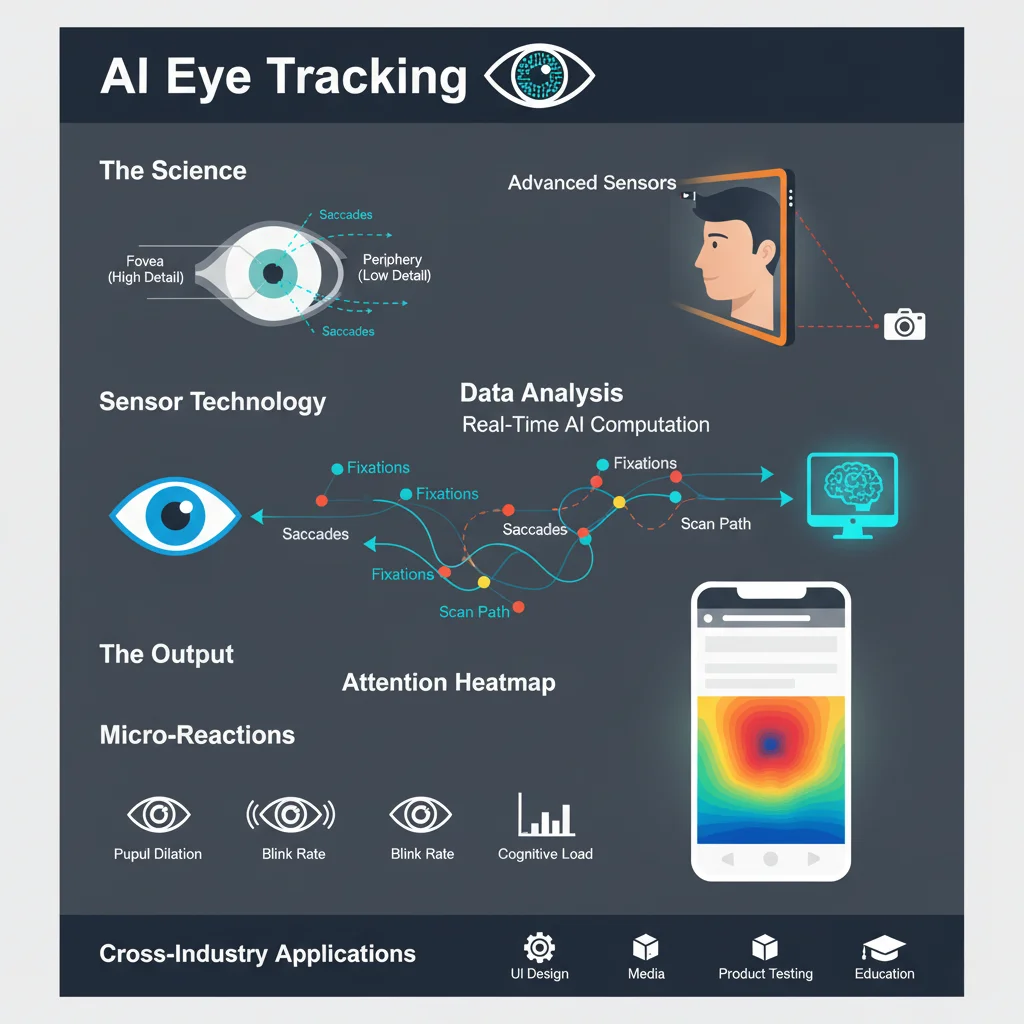

The Science Behind Human Attention

Before looking at the technology, it helps to understand what attention means in a scientific sense. Human vision is complex and layered. Only a small central region of the retina, called the fovea, delivers sharp detail; vision becomes progressively less precise toward the periphery. Because of this, the eyes constantly jump from point to point in rapid movements known as saccades, pausing briefly in between. These movements let the brain gather detailed information from different parts of the scene.

Attention is therefore a combination of where the eyes move and what the brain selects as important. Tracking attention means mapping these movements and understanding the timing of each shift.

How Modern Sensors Capture Eye Movements

Systems that decode attention begin with advanced sensing. Most use cameras, often paired with infrared illumination, to record the pupil and the reflection of light from the cornea. Infrared light is invisible to the viewer, so the sensors do not interfere with vision; instead, they detect small changes in the relative positions of the pupil and the corneal reflection as the viewer looks around. AI eye tracking builds on this by using learned models to interpret these micro-movements more accurately.
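One common way to turn these reflections into a gaze point is the pupil center corneal reflection approach: the vector from the corneal reflection (the "glint") to the pupil center is mapped to screen coordinates through a short calibration in which the viewer looks at known targets. The sketch below assumes that this vector has already been extracted from the camera image and, for brevity, fits a simple affine mapping by least squares. Real systems often use higher-order polynomials or learned models, and the function names here are hypothetical.

```python
"""Minimal gaze-calibration sketch (assumed affine model, pure Python)."""


def solve_3x3(m, v):
    """Solve m @ x = v for a 3x3 system by Gaussian elimination with pivoting."""
    a = [row[:] + [v[i]] for i, row in enumerate(m)]  # augmented matrix
    n = 3
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            f = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= f * a[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = a[r][n] - sum(a[r][c] * x[c] for c in range(r + 1, n))
        x[r] = s / a[r][r]
    return x


def fit_affine(vectors, targets):
    """Least-squares fit of target = c0 + c1*vx + c2*vy via normal equations."""
    rows = [(1.0, vx, vy) for vx, vy in vectors]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(3)]
    return solve_3x3(ata, atb)


def calibrate(vectors, screen_points):
    """Return a function mapping a pupil-glint vector to screen pixels.

    Hypothetical helper: vectors are (vx, vy) pupil-glint offsets recorded
    while the viewer fixated each known screen point (x, y).
    """
    cx = fit_affine(vectors, [p[0] for p in screen_points])
    cy = fit_affine(vectors, [p[1] for p in screen_points])

    def to_screen(vx, vy):
        return (cx[0] + cx[1] * vx + cx[2] * vy,
                cy[0] + cy[1] * vx + cy[2] * vy)

    return to_screen


if __name__ == "__main__":
    # Synthetic 9-point calibration: vectors on a 3x3 grid, targets from a known map.
    grid = [(vx, vy) for vy in (-1, 0, 1) for vx in (-1, 0, 1)]
    targets = [(960 + 400 * vx, 540 + 300 * vy) for vx, vy in grid]
    to_screen = calibrate(grid, targets)
    print(to_screen(0.5, -0.5))  # (1160.0, 390.0)
```

Because the synthetic targets lie exactly on an affine map, the fit recovers it; with real, noisy calibration data the result is the best affine approximation.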

High frame rates allow the technology to capture movements that last only a fraction of a second. Because people typically make several saccades each second, this speed is essential. Research-grade trackers can sample hundreds or even thousands of times per second, far above the rates of early research tools. Combined with AI-driven analysis, this makes it possible to follow attention as it shifts across a scene or screen without losing detail.
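To see why sampling rate matters, the arithmetic below estimates how many samples fall inside a single saccade. The 40 ms duration is an assumed illustrative value (real saccade durations vary with amplitude), and the listed rates are examples rather than specifications of any particular device.

```python
def samples_during(duration_ms: float, rate_hz: float) -> float:
    """Expected number of samples captured during an event of the given length."""
    return duration_ms * rate_hz / 1000.0


if __name__ == "__main__":
    saccade_ms = 40  # assumed illustrative saccade duration
    for rate in (30, 60, 250, 1000):  # example sampling rates in Hz
        print(f"{rate:>5} Hz -> {samples_during(saccade_ms, rate):5.1f} samples")
```

At 30 Hz such a saccade spans only about one sample, too few to measure its shape, while at 1,000 Hz it spans about 40.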

The Role of Computational Models

Collecting eye movement data is only one part of the process. The main task is interpreting what those movements mean. This is where computational models come in. Systems use mathematical rules to identify patterns in gaze direction and gaze duration. These patterns reveal what the viewer is drawn to and how long each visual area holds their interest.
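One widely used family of such rules is velocity-threshold identification (I-VT): samples moving slower than a threshold are grouped into fixations, and faster ones are treated as saccades. The sketch below assumes gaze samples already expressed in degrees of visual angle with timestamps in seconds. The 30°/s threshold and 60 ms minimum fixation duration are assumed illustrative defaults, and production filters usually also smooth noise before computing velocity.

```python
import math
from dataclasses import dataclass


@dataclass
class Fixation:
    start: float  # seconds
    end: float    # seconds
    x: float      # mean gaze position, degrees of visual angle
    y: float

    @property
    def duration(self) -> float:
        return self.end - self.start


def _close(points):
    """Collapse a run of slow samples into one Fixation at their mean position."""
    xs = [p[1] for p in points]
    ys = [p[2] for p in points]
    return Fixation(points[0][0], points[-1][0], sum(xs) / len(xs), sum(ys) / len(ys))


def ivt_fixations(samples, threshold_deg_s=30.0, min_duration_s=0.06):
    """Velocity-threshold (I-VT) fixation detection, a minimal sketch.

    samples: list of (t_seconds, x_deg, y_deg) sorted by time.
    Threshold and minimum duration are assumed values, not product defaults.
    """
    fixations, current = [], []
    for prev, cur in zip(samples, samples[1:]):
        dt = cur[0] - prev[0]
        if dt <= 0:
            raise ValueError("timestamps must be strictly increasing")
        velocity = math.hypot(cur[1] - prev[1], cur[2] - prev[2]) / dt
        if velocity < threshold_deg_s:
            if not current:
                current.append(prev)  # start a new fixation at the slow pair
            current.append(cur)
        elif current:  # a fast sample ends the fixation being built
            fixations.append(_close(current))
            current = []
    if current:
        fixations.append(_close(current))
    # Very short "fixations" are usually noise, so drop them.
    return [f for f in fixations if f.duration >= min_duration_s]


if __name__ == "__main__":
    # 100 Hz synthetic trace: steady gaze, one 10-degree jump, steady gaze again.
    trace = [(i * 0.01, 0.0 if i < 20 else 10.0, 0.0) for i in range(40)]
    for fix in ivt_fixations(trace):
        print(f"fixation at x={fix.x:.1f} deg for {fix.duration * 1000:.0f} ms")
```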

Models often examine several factors:

• Fixations: moments when the eyes rest on a point

• Saccades: quick shifts from one point to another

• Scan paths: sequences of movements that show how attention flows

• Dwell time: the total time gaze stays within a given region

By combining these measures, the system builds a detailed picture of how attention behaves across the entire viewing period.
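Dwell time and scan paths follow from assigning each fixation to an area of interest (AOI). The sketch below assumes rectangular AOIs in screen pixels and fixations given as (x, y, duration) tuples; the helper names and the AOI names and coordinates in the example are hypothetical.

```python
def aoi_of(x, y, aois):
    """Return the name of the first AOI containing (x, y), or None."""
    for name, (x0, y0, x1, y1) in aois.items():
        if x0 <= x < x1 and y0 <= y < y1:
            return name
    return None


def dwell_and_scanpath(fixations, aois):
    """fixations: (x, y, duration_s) tuples in time order.

    Returns (total dwell time per AOI, scan path as a list of AOI names).
    Consecutive fixations in the same AOI collapse into one scan-path step;
    fixations outside every AOI are ignored.
    """
    dwell = {name: 0.0 for name in aois}
    path = []
    for x, y, dur in fixations:
        name = aoi_of(x, y, aois)
        if name is None:
            continue
        dwell[name] += dur
        if not path or path[-1] != name:
            path.append(name)
    return dwell, path


if __name__ == "__main__":
    # Hypothetical AOIs on a product page: (x0, y0, x1, y1) in pixels.
    aois = {"logo": (0, 0, 200, 100), "price": (0, 400, 300, 500),
            "button": (600, 400, 800, 480)}
    fixations = [(50, 50, 0.3), (60, 70, 0.2), (100, 450, 0.4),
                 (700, 420, 0.25), (120, 460, 0.15)]
    dwell, path = dwell_and_scanpath(fixations, aois)
    print(dwell)
    print(" -> ".join(path))
```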

Why Results Are Generated So Quickly

The main reason modern tools decode attention within seconds is the combination of real-time sensing and efficient computation. Fast algorithms, including machine learning models, can process each gaze sample as it arrives. Instead of waiting for post-hoc analysis, they classify movements such as fixations and saccades almost instantly.

These models are trained on large datasets from prior studies, which helps them recognize common gaze patterns with high accuracy. When a new viewer looks at a scene, the system compares their behavior to these learned patterns. The result is immediate feedback about what captures attention and what is overlooked.
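The text does not say which learning method is used, so the sketch below substitutes one of the simplest: a nearest-centroid classifier. Each prior session is summarized as a feature vector (here, mean fixation duration in seconds and saccades per second), vectors are averaged per label, and a new viewer receives the label of the closest average after per-feature scaling. The labels, feature values and scaling factors are hypothetical placeholders, not results from any study.

```python
import math
from statistics import mean


def fit_centroids(labelled):
    """labelled: dict label -> list of feature tuples from prior sessions."""
    return {label: tuple(mean(col) for col in zip(*vectors))
            for label, vectors in labelled.items()}


def nearest_label(features, centroids, scale):
    """Return the label whose centroid is closest in scaled Euclidean distance.

    scale divides each feature so that units (seconds vs. counts per second)
    contribute comparably; the values are assumptions.
    """
    def dist(centroid):
        return math.sqrt(sum(((f - m) / s) ** 2
                             for f, m, s in zip(features, centroid, scale)))
    return min(centroids, key=lambda label: dist(centroids[label]))


if __name__ == "__main__":
    # Hypothetical prior sessions: (mean fixation duration s, saccades per s).
    prior = {
        "reading": [(0.25, 3.8), (0.22, 4.1), (0.24, 4.0)],
        "search": [(0.18, 5.2), (0.16, 5.6), (0.17, 5.0)],
    }
    centroids = fit_centroids(prior)
    print(nearest_label((0.23, 4.0), centroids, scale=(0.05, 0.5)))
```

Real systems typically use far richer features and models; the point here is only the "compare to learned patterns" step.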

Heatmaps and Attention Maps

One of the most popular outputs in viewer attention studies is the heatmap. This visual representation uses color gradients to show which areas received the most gaze time. Warmer colors mark high-interest regions; cooler colors indicate areas with limited engagement.
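A heatmap of this kind can be built by letting every fixation add a Gaussian "blob" weighted by its duration, then normalizing so the hottest cell equals 1. The sketch below works on a coarse grid and prints characters instead of colors; the grid size, the sigma value and the character ramp are assumptions for illustration.

```python
import math


def heatmap(fixations, width, height, sigma):
    """fixations: (x, y, duration_s) in grid-cell units.

    Each fixation adds a Gaussian weighted by its duration; the result is
    normalized so the maximum cell is 1.0.
    """
    grid = [[0.0] * width for _ in range(height)]
    two_sigma_sq = 2.0 * sigma * sigma
    for fx, fy, dur in fixations:
        for row in range(height):
            for col in range(width):
                d2 = (col - fx) ** 2 + (row - fy) ** 2
                grid[row][col] += dur * math.exp(-d2 / two_sigma_sq)
    peak = max(max(r) for r in grid)
    if peak > 0:
        grid = [[v / peak for v in r] for r in grid]
    return grid


def render(grid, ramp=" .:-=+*#"):
    """Text stand-in for a color gradient: denser characters mean 'warmer'."""
    top = len(ramp) - 1
    return "\n".join("".join(ramp[min(int(v * len(ramp)), top)] for v in row)
                     for row in grid)


if __name__ == "__main__":
    # A long fixation on the left and a short one on the right.
    hm = heatmap([(2, 2, 0.6), (7, 2, 0.2)], width=10, height=5, sigma=1.0)
    print(render(hm))
```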

Attention maps provide a simple way to translate complex movement data into visuals that are easy to understand. Researchers and designers can review which parts of an image attract early focus, which receive sustained attention and which are barely noticed.

These maps also help clarify how different demographic groups respond to the same material. Since no two people see a scene in the same way, comparing heatmaps reveals patterns that might not be obvious without this visual summary.
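One common way to compare two groups' attention maps numerically is the Pearson correlation coefficient between their cell values: 1 means identical spatial patterns, values near 0 mean little relationship, and negative values mean one group tends to look where the other does not. The sketch assumes both maps were computed on the same grid; the function name is hypothetical.

```python
import math


def map_correlation(a, b):
    """Pearson correlation between two equally sized 2-D maps (lists of rows)."""
    xs = [v for row in a for v in row]
    ys = [v for row in b for v in row]
    if len(xs) != len(ys):
        raise ValueError("maps must have the same shape")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    if vx == 0 or vy == 0:
        raise ValueError("a uniform map has no defined correlation")
    return cov / math.sqrt(vx * vy)


if __name__ == "__main__":
    group_a = [[0.1, 0.9], [0.2, 0.4]]
    group_b = [[0.2, 0.8], [0.1, 0.5]]
    print(f"similarity: {map_correlation(group_a, group_b):.2f}")
```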

Understanding Micro Reactions

Modern systems do more than record gaze direction. Many also measure subtle changes in pupil size and blink rate, and some, paired with a face camera, detect facial micro-expressions. These small reactions often signal emotional responses or moments of cognitive load.

For instance:

• Enlarged pupils may signal mental effort

• Rapid blinking may appear when a viewer processes new information

• Slower blinking can indicate relaxed engagement

While these signals do not directly decode emotions, they offer clues about how the viewer experiences the material in front of them. This level of detail is one reason attention analysis has expanded across research fields.
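Pupil and blink signals are usually cleaned before they are interpreted. The sketch below shows two common steps under stated assumptions: subtractive baseline correction, which expresses pupil size as change from a short pre-stimulus window, and blink counting, which treats runs of missing pupil samples of plausible length as blinks. The 50 to 500 ms blink window and the use of None for lost samples are assumptions. Because pupil size also responds strongly to lighting, such comparisons are most meaningful when luminance is held constant.

```python
def baseline_corrected(pupil, baseline_samples):
    """Subtractive baseline correction.

    pupil: pupil sizes (e.g. in mm) with None for samples lost to blinks.
    Returns each value minus the mean of the valid baseline samples.
    """
    base = [p for p in pupil[:baseline_samples] if p is not None]
    if not base:
        raise ValueError("no valid baseline samples")
    b = sum(base) / len(base)
    return [None if p is None else p - b for p in pupil]


def count_blinks(pupil, rate_hz, min_ms=50, max_ms=500):
    """Count runs of missing samples whose duration is plausible for a blink.

    Shorter gaps are treated as tracking dropouts, longer ones as data loss;
    the thresholds are assumed values.
    """
    blinks = 0
    run = 0
    for p in pupil + [0.0]:  # sentinel value closes a trailing run
        if p is None:
            run += 1
            continue
        ms = run * 1000.0 / rate_hz
        if run and min_ms <= ms <= max_ms:
            blinks += 1
        run = 0
    return blinks


if __name__ == "__main__":
    # 100 Hz synthetic trace: baseline, a 150 ms blink, dilation, a 20 ms dropout.
    trace = [3.0] * 10 + [None] * 15 + [3.4] * 10 + [None] * 2 + [3.5] * 5
    print("blinks:", count_blinks(trace, rate_hz=100))
    print("change after blink:", round(baseline_corrected(trace, 10)[30], 2))
```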

Applications Across Industries

Viewer attention studies support several real-world uses:

• User interface design

• Media content review

• Product testing

• Safety instructions and warning label assessment

• Educational material design

• Navigation studies in both virtual and real spaces

In each case, attention analysis helps teams judge whether the material is clear, whether crucial elements are noticed quickly and whether the layout supports the intended experience.
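Whether crucial elements are noticed quickly is often measured as time to first fixation (TTFF) on an area of interest. The sketch below computes it for one session and then the share of sessions in which the area was noticed within a deadline. The (start, x, y) fixation format, the rectangle format, the function names and the warning-label example with a 2-second deadline are all hypothetical.

```python
def time_to_first_fixation(fixations, aoi, stimulus_onset=0.0):
    """fixations: (start_s, x, y) in time order; aoi: (x0, y0, x1, y1).

    Returns seconds from stimulus onset to the first fixation inside the AOI,
    or None if the AOI was never fixated.
    """
    x0, y0, x1, y1 = aoi
    for start, x, y in fixations:
        if start >= stimulus_onset and x0 <= x < x1 and y0 <= y < y1:
            return start - stimulus_onset
    return None


def noticed_within(sessions, aoi, deadline_s):
    """Share of sessions whose first fixation on the AOI came within the deadline."""
    if not sessions:
        raise ValueError("no sessions given")
    hits = 0
    for fixations in sessions:
        ttff = time_to_first_fixation(fixations, aoi)
        if ttff is not None and ttff <= deadline_s:
            hits += 1
    return hits / len(sessions)


if __name__ == "__main__":
    warning_label = (100, 100, 300, 200)  # hypothetical AOI in pixels
    sessions = [
        [(0.0, 500, 500), (0.4, 150, 150)],  # noticed after 0.4 s
        [(0.0, 500, 500), (2.5, 200, 120)],  # noticed, but late
        [(0.0, 50, 50)],                     # never noticed
    ]
    print(f"noticed within 2 s: {noticed_within(sessions, warning_label, 2.0):.0%}")
```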

Systems that use AI eye tracking also support testing with remote participants using ordinary webcams, making research accessible beyond physical labs. This allows wider data collection from varied viewer groups.

The Importance of Context

Attention does not function the same way in every scenario. Lighting, familiarity, emotional state and task instructions all influence gaze patterns. Because of this, modern systems consider context as a key part of analysis.

Researchers usually set clear goals before running a session. They might ask whether the viewer is searching for something, learning something or reacting naturally without guidance. Each context produces unique gaze paths.

Understanding these variations helps avoid misinterpretation. A viewer who scans quickly may not be distracted. They might simply understand the material well. A viewer who stares at one point for too long may not be deeply interested. They might be confused. Context holds the key to accurate conclusions.

Ethical Considerations

As tracking methods become more capable, protecting viewer privacy becomes essential. Researchers must ensure that data is collected with consent and used responsibly. Systems should avoid storing identifiable information unless necessary for the study.
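One way to avoid storing identifiable information is to replace participant identifiers with a keyed hash before gaze data is saved. The sketch uses HMAC-SHA256 from Python's standard library; the `pseudonymize` name, the 16-character truncation and keeping the key apart from the gaze data are illustrative choices, not a prescribed setup. Note that keyed hashing is pseudonymization rather than anonymization: anyone holding the key can re-link records.

```python
import hashlib
import hmac
import secrets


def pseudonymize(participant_id: str, key: bytes) -> str:
    """Replace an identifying ID with a keyed hash (HMAC-SHA256).

    The same ID and key always give the same pseudonym, so sessions from one
    participant can be linked without storing the original identifier.
    """
    digest = hmac.new(key, participant_id.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]  # truncation length is an assumed choice


if __name__ == "__main__":
    key = secrets.token_bytes(32)  # store separately from the gaze data
    print(pseudonymize("jane.doe@example.com", key))
```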

It is also important to clarify that attention tracking does not read thoughts. It observes visual focus, not personal beliefs or private memories. Communicating this clearly helps viewers feel comfortable during participation.

Conclusion

Modern attention decoding systems bring together fast sensors, advanced models and real-time computation to understand how people interact with visual material. These tools map eye movements, interpret patterns, highlight focus points and reveal moments of interest within seconds. By combining movement data with contextual factors, they give a clear picture of how viewers receive information and navigate visual scenes. Their value lies in clarity, speed and the ability to support research across several fields.

FAQs

1. How accurate are attention decoding systems?

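Research-grade trackers are highly precise at detecting movements and fixation points, often reaching an accuracy of around half a degree of visual angle or better under good conditions; webcam-based systems are less accurate. However, interpreting these patterns still requires human judgment and an understanding of the study context.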

2. Do these systems work with natural lighting?

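Generally, yes, in regular indoor lighting. Most systems track reflections using infrared illumination or high-sensitivity cameras, which function well indoors. Strong direct sunlight contains infrared light that can interfere with infrared-based trackers, so outdoor use may be less reliable.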

3. Can attention tracking detect emotions?

Not directly. It records physical signals such as changes in pupil size or blink rate. These may relate to emotional states but do not provide definitive emotional labels.

4. Is special equipment needed?

Many systems rely on built-in or external cameras paired with computational models. Some setups use dedicated devices, while others work through standard hardware, depending on the accuracy required.

5. Does attention tracking affect the viewer’s performance?

Generally, no. The process is passive and is designed not to interfere with natural viewing behavior. It simply records visual patterns as they occur.
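Eye-tracker accuracy is usually quoted in degrees of visual angle rather than pixels, because the same angular error covers more of the screen the farther the viewer sits. The conversion below uses the standard relation θ = 2·atan(s / 2d) between an on-screen extent s and a viewing distance d. The 60 cm distance in the example is an assumed typical desktop setup.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Angle subtended by an on-screen extent at the given viewing distance."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_on_screen_cm(angle_deg: float, distance_cm: float) -> float:
    """Inverse: on-screen extent corresponding to an angular error."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


if __name__ == "__main__":
    distance = 60.0  # assumed viewing distance in cm
    for error in (0.5, 1.0, 2.0):
        print(f"{error} deg at {distance:.0f} cm -> "
              f"{size_on_screen_cm(error, distance):.2f} cm on screen")
```

At 60 cm, an error of 0.5° corresponds to roughly half a centimeter on screen, which is why small interface elements can be hard to distinguish with lower-accuracy trackers.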